|

Aerial Vegetation Survey

Ryan Becker, Project Leader

About Image Resolution

The actual resolution of an aerial photo depends on a variety of factors. Most apply to any aerial imaging technique. Some, such as parallax, may be negligible for satellite imagery but are of considerable concern for low-flying aircraft. The following description uses the Bat mission in Hawaii as an example to illustrate the factors that matter most for image resolution.

Factor 1: Initial (Maximum) Resolution

Digital imagery offers a straightforward way to determine the initial resolution of aerial imagery. Only three numbers are required: the camera's pixel dimensions, the lens's angular field of view (which is set by the lens focal length and the sensor size), and the camera's altitude when the image was captured. As an example, the Bat flew with a five-megapixel digital camera in Hawaii. The largest pixel dimension this camera offered was 2580 pixels. The angular field of view captured by this camera without any zoom applied is 53.1 degrees, and flight altitude varied between 500 and 1,000 feet above terrain. The swath width is twice the altitude times the tangent of half the field of view; because the tangent of 26.55 degrees is almost exactly 0.5, the swath is about as wide as the aircraft is high. The camera therefore took pictures of swaths ranging between 500 and 1,000 feet wide. Given that this swath was spread evenly over 2580 pixels, each pixel covered between about 0.19 and 0.39 feet, or roughly 2.3 and 4.7 inches, of swath. Zooming the camera decreases the area imaged in each picture but increases the possible resolution.

Factor 2: Degrading Elements

A variety of factors may reduce an image's actual resolution. These are important to consider when planning a mission, as some can be mostly avoided with careful planning. Others, such as the effect of parallax, cannot.

The camera's operating characteristics, particularly its shutter and f-stop settings, require special attention. Fast shutter speeds become critical as altitude drops, because the apparent motion of the ground across the frame increases at the same time. Pictures taken with unacceptably slow shutter speeds appear blurred or streaked in the direction of flight.
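The Factor 1 arithmetic and the shutter-speed concern can be sketched together in a few lines of Python. This is a minimal illustration, not the project's own tooling: it assumes a camera pointing straight down over flat terrain, the function names are made up for this example, and the 60 ft/s ground speed is a hypothetical placeholder because no airspeed is stated here.

```python
import math


def swath_width_ft(altitude_ft, fov_deg):
    """Ground width covered by one frame (camera pointing straight down, flat terrain)."""
    return 2.0 * altitude_ft * math.tan(math.radians(fov_deg) / 2.0)


def ground_sample_ft(altitude_ft, fov_deg, pixels_across):
    """Feet of ground covered by one pixel along the long image dimension."""
    return swath_width_ft(altitude_ft, fov_deg) / pixels_across


def blur_pixels(ground_speed_ft_s, exposure_s, gsd_ft):
    """Pixels of smear caused by forward motion during a single exposure."""
    return ground_speed_ft_s * exposure_s / gsd_ft


FOV_DEG = 53.1             # field of view quoted in the text
PIXELS_ACROSS = 2580       # largest pixel dimension quoted in the text
GROUND_SPEED_FT_S = 60.0   # HYPOTHETICAL ground speed, for illustration only

for altitude_ft in (500.0, 1000.0):
    gsd = ground_sample_ft(altitude_ft, FOV_DEG, PIXELS_ACROSS)
    print(f"altitude {altitude_ft:.0f} ft: {gsd:.3f} ft/pixel ({gsd * 12:.1f} in)")
    for exposure_s in (1 / 500, 1 / 60):
        smear = blur_pixels(GROUND_SPEED_FT_S, exposure_s, gsd)
        print(f"  exposure 1/{round(1 / exposure_s)} s: {smear:.2f} pixels of smear")
```

Under these assumptions the pixel footprint comes out near 0.19 feet at 500 feet and 0.39 feet at 1,000 feet. The smear stays below one pixel at 1/500 second but grows to several pixels at 1/60 second, and it is larger at the lower altitude, where each pixel covers less ground.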
See here for an example of a streaked image. High f-stop settings contribute to this problem by limiting the light reaching the sensor, which forces longer exposures. Cameras should have their f-stops manually set to a low value, or their shutter exposure times set to 1/500 second or faster.

Aircraft disposition at the moment of exposure plays a vital role in determining the actual resolution, as well as the general quality, of the image. Airspeed and altitude combine with pitch, roll and yaw to produce a complicated set of influences on the image. These influences cause blurring and shift the center of the picture off vertical. The Bat records the instantaneous values of altitude, airspeed, roll, pitch, and yaw so that software can later correct image rotation and placement, but the shift itself irreversibly worsens the effect of parallax.

Parallax refers to two different phenomena in this context. The strict definition refers to the perceived change in a given object when it is imaged from two different locations. The term is also used here for the difference in properties between different areas of a single image. The strict definition is illustrated by figure 1. Notice how the left-leaning branch of the tree is clearly visible to the plane on the left, while the tree trunk hides the branch from the plane on the right. The two pictures the airplanes collect will appear to show two different trees even though both planes imaged the same one.

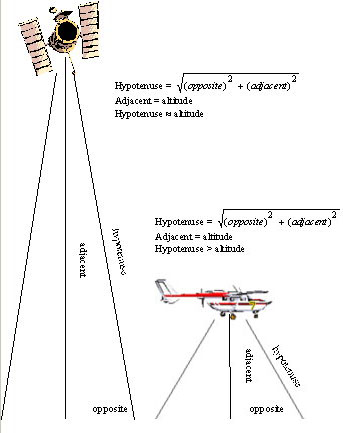

The second definition of parallax requires some math to understand. Consider the satellite and plane of figure 2. Notice that the hypotenuse shown in the satellite's field of view is approximately equal to the altitude, while the plane's hypotenuse is noticeably greater than its altitude. This means that objects at the edge of pictures taken by the plane will appear smaller than those in the middle because they are physically farther from the camera. Now consider the plane in figure 3. The pitch up has led to a dramatic increase in the distance from the plane to objects on the leading edge of the picture.

Extremely expensive imaging systems for manned aircraft employ gyroscopically stabilized mounts that allow the camera to maintain a perfectly vertical orientation despite moderate turbulence and deviations from a straight, level flight path.
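The geometry behind this second kind of parallax can be made concrete with a short Python sketch. It assumes flat ground, and it applies the 53.1-degree field of view along the direction of flight purely for illustration. The function names and the 10-degree pitch value are hypothetical and do not come from the Bat's flight logs.

```python
import math


def slant_range_ft(altitude_ft, off_vertical_deg):
    """Distance from the camera to flat ground along a ray tilted off vertical."""
    return altitude_ft / math.cos(math.radians(off_vertical_deg))


def edge_ranges_ft(altitude_ft, fov_deg, pitch_deg=0.0):
    """Slant range to the trailing and leading frame edges.

    Assumes the camera is rigidly mounted, so pitching the aircraft up by
    pitch_deg tilts the whole field of view forward by the same angle.
    Only valid while pitch_deg + fov_deg / 2 stays below 90 degrees.
    """
    half_fov = fov_deg / 2.0
    trailing = slant_range_ft(altitude_ft, abs(pitch_deg - half_fov))
    leading = slant_range_ft(altitude_ft, pitch_deg + half_fov)
    return trailing, leading


ALTITUDE_FT = 1000.0
FOV_DEG = 53.1  # field of view quoted in the text

for pitch_deg in (0.0, 10.0):  # 10 degrees is a HYPOTHETICAL pitch-up
    trailing, leading = edge_ranges_ft(ALTITUDE_FT, FOV_DEG, pitch_deg)
    print(
        f"pitch {pitch_deg:4.1f} deg: trailing edge {trailing:6.0f} ft, "
        f"leading edge {leading:6.0f} ft (altitude {ALTITUDE_FT:.0f} ft)"
    )
```

In level flight both edges are about 12 percent farther from the camera than the point directly below it. With the hypothetical 10-degree pitch up, the leading edge moves to roughly 24 percent farther than the altitude, while the trailing edge moves closer. That asymmetry is the effect figure 3 describes.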

Satellites are inherently capable of taking almost perfectly stable images, but they must do so while looking through the entire atmosphere. Clarity and color depth of satellite images inevitably suffer as a result, and visible-light images are useless when the target area is obscured by clouds. While image stability is a formidable challenge for low-altitude UAVs, atmospheric and even cloud interference can be virtually ignored. The Bat returned clear images from beneath layers of clouds during two flights in Hawaii, although some images, like figure 4, were degraded when the Bat flew directly through a cloud.

The Bottom Line

So, are UAV-collected images good enough to use? It depends on the intended application. Given current FAA regulations, each individual image is basically limited to a maximum area of seventeen acres; when the overlap required for orthorectification is subtracted, this drops to about five acres. The orthorectification process yielded useful mosaics when carried out manually on groups of pictures with a common element, such as a river or road. Other images without such common elements ended up having noticeable discontinuities even when manually mosaicked. Mosaicking was only successful when a basemap was used for initial placement of the raw images. While collecting the individual georeferenced images costs far less than satellite data or manned aerial imaging, the complexity involved in mosaicking increases the price for fully orthorectified, mosaicked data to about the same cost as satellite data.
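The seventeen-acre figure can be reproduced from the numbers given for the Bat's camera. The Python sketch below rests on two assumptions that are not stated in the text: a 4:3 frame, which is typical for a five-megapixel camera, and a 1,000-foot ceiling above ground. The 45 percent overlap values are hypothetical, chosen only to show how overlap in both directions shrinks the usable area.

```python
import math

SQ_FT_PER_ACRE = 43560.0


def footprint_acres(altitude_ft, fov_deg, aspect_ratio=4.0 / 3.0):
    """Ground area of one frame (camera pointing straight down, flat terrain).

    fov_deg applies to the long side of the frame; aspect_ratio is the ratio
    of long side to short side and is an ASSUMPTION (4:3).
    """
    long_side_ft = 2.0 * altitude_ft * math.tan(math.radians(fov_deg) / 2.0)
    short_side_ft = long_side_ft / aspect_ratio
    return long_side_ft * short_side_ft / SQ_FT_PER_ACRE


def net_acres(gross_acres, forward_overlap, side_overlap):
    """Area each frame contributes once overlap with its neighbors is removed."""
    return gross_acres * (1.0 - forward_overlap) * (1.0 - side_overlap)


gross = footprint_acres(altitude_ft=1000.0, fov_deg=53.1)
net = net_acres(gross, forward_overlap=0.45, side_overlap=0.45)  # HYPOTHETICAL overlaps
print(f"gross footprint: {gross:.1f} acres")
print(f"net contribution with 45% overlap each way: {net:.1f} acres")
```

Under these assumptions the gross footprint is a little over seventeen acres, and an overlap somewhat under half the frame in each direction brings the net contribution to about five acres.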

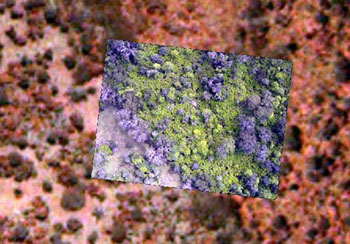

The improvement over satellite imagery is striking. Figure 5 shows the improvement in detail over Emerge data, which offers approximately one-meter resolution. Click here for an uncompressed image of raw Bat data. Note that individual leaves occupy several pixels.

Efficient, automated orthorectification and mosaicking of UAV-collected imagery is unlikely until the FAA allows UAVs to operate more than one thousand feet above ground level. The ephemeris data associated with each image is accurate enough to properly locate features to within ten meters, so even raw pictures retain value as georeferenced imagery. This type of data is ideal when high-resolution images are required in remote areas and manned aerial surveys are impractical or prohibitively expensive. |

|